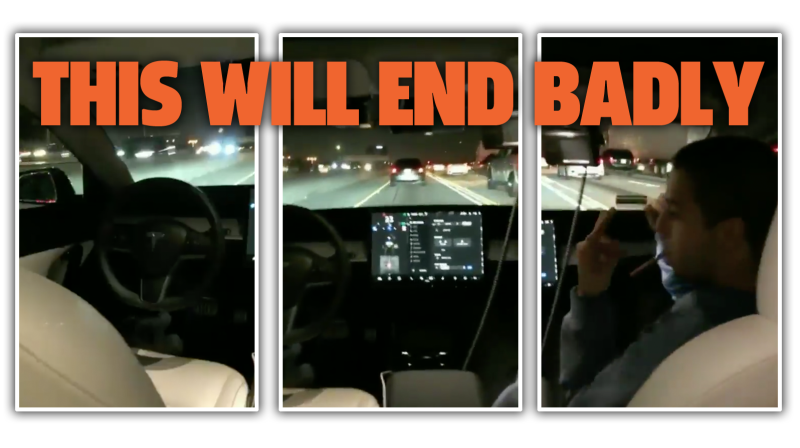

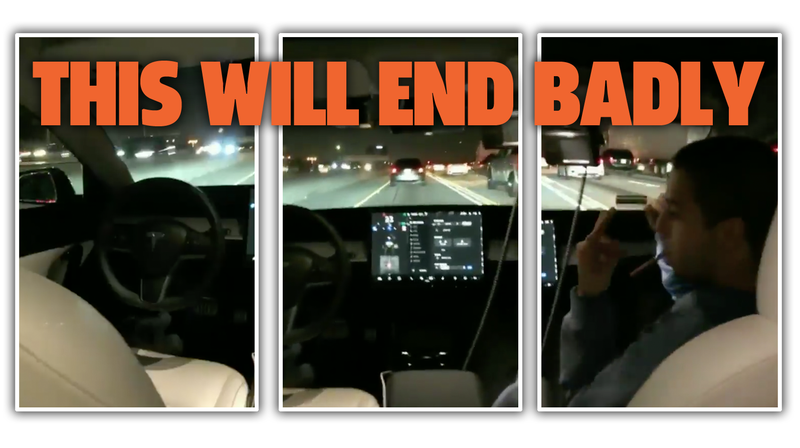

Full disclosure: I think all Level 2 semi-autonomy systems (that is, every sort of partial self-driving system you can get in a car today) are stupid. Not because the technology is necessarily bad, but because these systems, like Tesla's Autopilot, just aren't compatible with how human brains work. If you don't believe me, please stare gape-mouthed at this video of a noted internet idiot's new Tesla Model 3 driving itself on Autopilot with no one in the driver's seat.

Here’s the video, in the reply to this tweet:

That Tesla Model 3 Performance is owned by Alex Choi, famous for his exoskeleton roll-caged Lamborghini Huracan, among other things, I guess, and what's going on in this video is deeply, lavishly stupid.

It's stupid on the part of Alex Choi (who appears to be filming from the back seat) and whoever that guy in the passenger seat is. It's also stupid on Tesla's part for selling a Level 2 semi-autonomous system with descriptions like "full self-driving capability," which mislead consumers into thinking that pulling shit like this is somehow okay.

Let’s just be absolutely clear here: this is not, in any way, okay. Tesla’s Autopilot requires someone to be ready to take over driving at a moment’s notice; if that happens while you’re in the passenger seat or back seat, you’re boned, along with anyone unfortunate enough to be around you, caught in your sphere of idiot-bonery.


The video is only eight seconds long, and Tesla's Autopilot reminds you to keep your hands on the wheel only every 15 to 20 seconds, so there's plenty of time to shoot an idiotic video like this before the car nags anyone.

It's strange that Tesla isn't checking the seat occupancy sensor (the one almost all cars use to decide whether someone is in a seat and, if so, to beep at you and illuminate the seat belt warning light) as a failsafe that keeps Autopilot from being engaged when the driver's seat is empty. That should be pretty damn easy to implement, and all the hardware needed to do it is already in the car.

So why aren’t they doing that? That would keep dangerous bullshit like this from happening.


Videos like this, or of people sleeping at the wheel of Teslas with Autopilot engaged, should be a big fat wake-up call that these systems are way too easy to abuse by determined or sleepy idiots, and sooner or later, wrecks will happen. These systems are not designed to be used like this; they can stop working at any time, for any number of reasons. They can make bad decisions that require a human to jump in and correct them. They are not for this.

I reached out to Tesla for comment, and they pointed me to the same thing they always say in these circumstances, which basically boils down to “don’t do this.” Here’s Tesla’s boilerplate reminder (emphasis mine):

“Autopilot is intended for use only with a fully attentive driver who has their hands on the wheel and is prepared to take over at any time. While Autopilot is designed to become more capable over time, in its current form, it is not a self-driving system, it does not turn a Tesla into an autonomous vehicle, and it does not allow the driver to abdicate responsibility. When used properly, Autopilot reduces a driver’s overall workload, and the redundancy of eight external cameras, radar and 12 ultrasonic sensors provides an additional layer of safety that two eyes alone would not have.”


If someone wants to do this on a private track and see what happens, have at it. We'll probably even run the video. But doing this on public roads, with non-idiots driving around with no idea what's happening, is irresponsible and a terrible idea.

So knock it off, dummies.