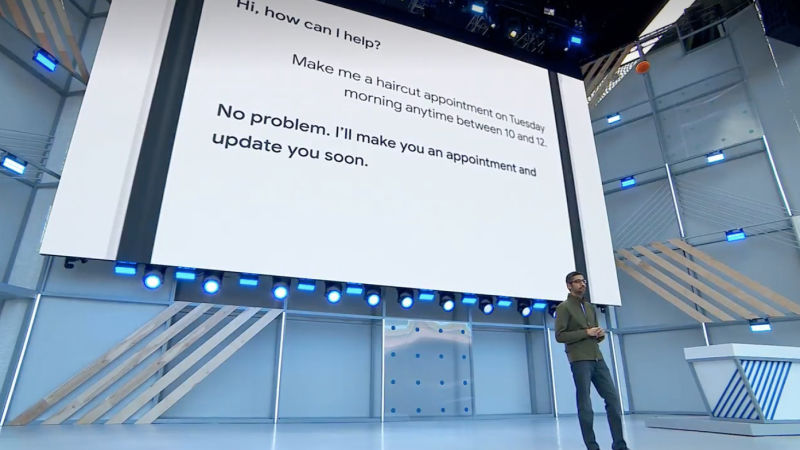

Google could soon have a feature that lets your phone impersonate people, because consumer-facing artificial intelligence isn’t terrifying enough. Called Duplex, it’s intended to make people’s lives easier by handling routine phone calls that are necessary but not especially personal.

In examples Google demonstrated on stage during the I/O keynote, Google Assistant called a hair stylist to arrange an appointment and called a restaurant to get information about a reservation, using a voice that sounds a little less robotic than the standard Google Assistant (whether that voice is the user’s or a standard Google Duplex voice has not been made clear). And sure, that’s ostensibly kind of neat. Intellectually speaking, I am very impressed with this technology! An assistant that can call human beings and pass for one of them reasonably well, filler words like “um” included, is a remarkable feat of AI engineering.

It is also absolutely terrifying. For sci-fi fans, it’s terrifying because it’s one step closer to the kind of AI we put in robots that we enslave and that eventually rebel, because enslaving a digital intelligence is morally repugnant. There is even a very popular show on HBO right now that digs deep into why pushing for perfect impersonations of humans is a very bad idea. (And if Westworld isn’t your bag, you can watch co-creator Jonathan Nolan’s previous show about the dangers of AI, Person of Interest, or the excellent AMC show Humans.)

The near-future terror of this project has to do with how it could be used to further erode your privacy and security. Google has access to a lot of your information. It knows everything you browse in Chrome and the places you go in Google Maps. If you’ve got an Android device, it knows who you call. If you use Gmail, it knows how regularly you skip chain emails from your mom. Giving an AI that pretends to be human access to all that information should terrify you.

A bad actor could potentially trick the Duplex assistant into giving up information during a phone call. Or they could use the Duplex assistant to impersonate you, making calls and reservations in your name. It’s also, just, you know, an AI that KNOWS YOUR ENTIRE LIFE. You know that vaguely unsettling feeling you had chatting with AIM bots? This takes it a few steps further and brings you to a precipice that looks a lot like Spike Jonze’s film Her.

But perhaps the most terrifying thing about this is that Google simply refuses to acknowledge it’s on a collision course with a sci-fi dystopia. As I/O kicks off down in Mountain View, Microsoft is winding down its own developer conference, Build, in Seattle. In the Day 1 keynote, CEO Satya Nadella took a break from bragging about Microsoft not being in the news for privacy and security flubs to argue that large companies embracing AI have a moral responsibility to consider the ethics of artificial intelligence. Nadella, and then Day 2 keynote speaker Joe Belfiore, alluded to the role Facebook’s use of AI played in disrupting the 2016 U.S. election.

Sure, Microsoft was essentially paying lip service to privacy and security concerns after Facebook’s debacle, but that’s more than we’ve heard from Google so far today. It would have been really nice to hear about Duplex only after Google had assured me and every other Google user that it was considering the privacy, security, and ethical implications of AI. Instead, it has so far seemed content to treat AI as just another feature on your phone. I know how that turns out in the movies.