The Mars rover Curiosity has been on the Red Planet for nearly eight years, but its journey is nowhere near finished, and it’s still getting upgrades. You can help it out by spending a few minutes labeling raw data to feed to its terrain-scanning AI.

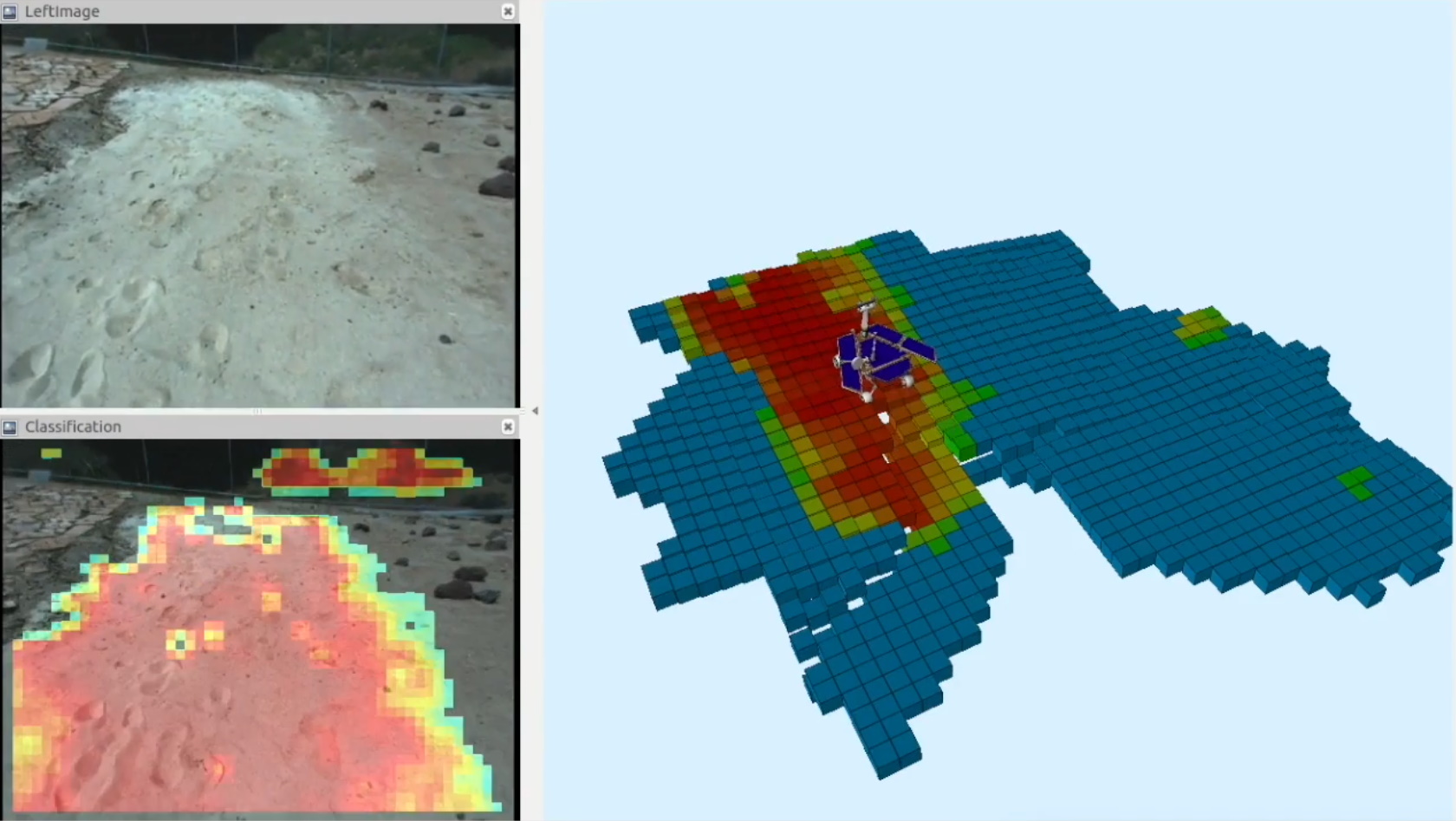

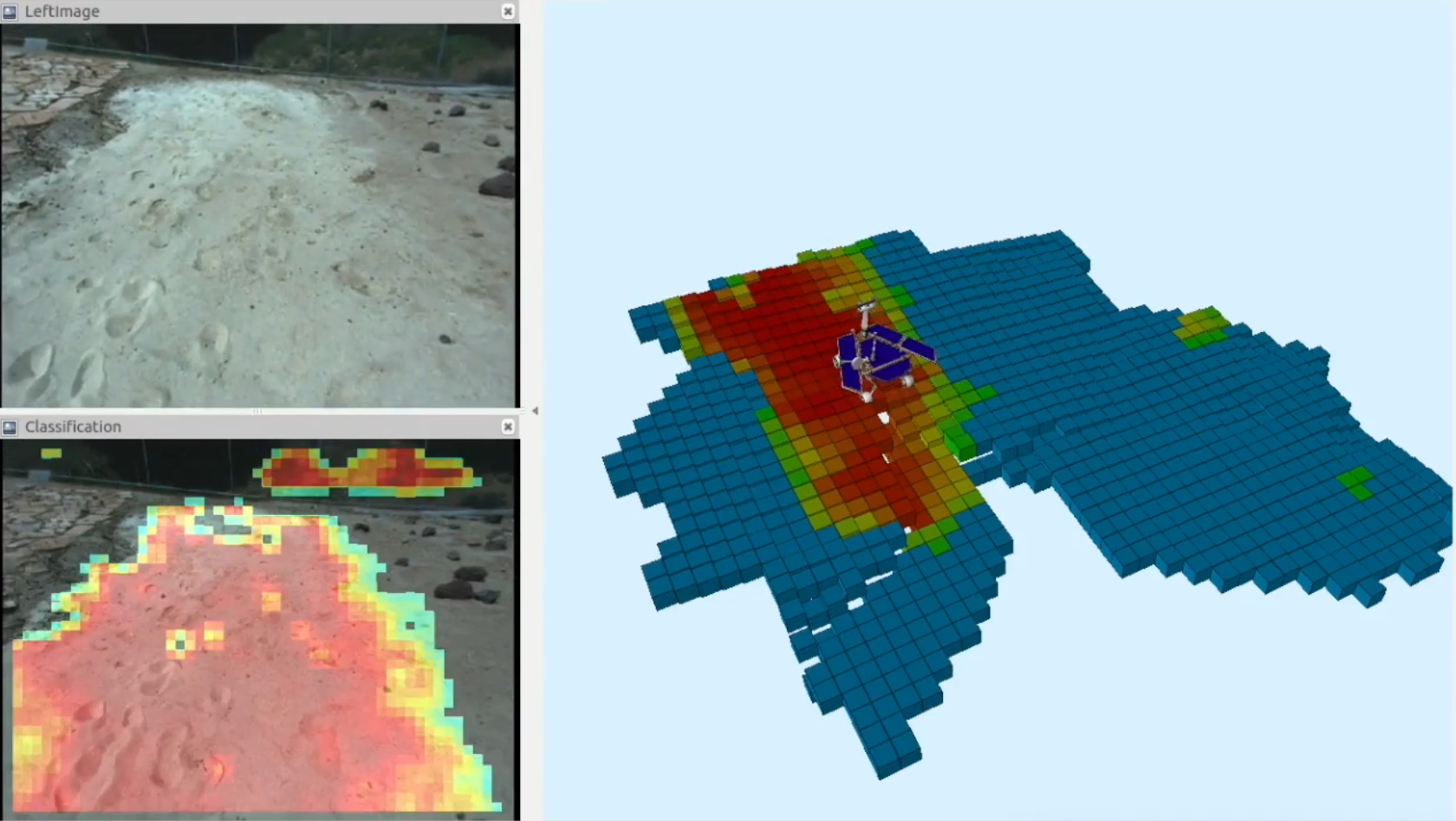

Curiosity doesn’t navigate on its own; there’s a whole team of people on Earth who analyze the imagery coming back from Mars and plot a path forward for the mobile science laboratory. To do so, however, they need to examine the imagery carefully to understand exactly where rocks, soil, sand, and other features are.

This is exactly the type of task that machine learning systems are good at: You give them a lot of images with the salient features on them labeled clearly, and they learn to find similar features in unlabeled images.
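To make the idea concrete, here is a toy sketch of learning from labeled examples. It is not how SPOC works: it swaps in a much simpler technique (a nearest-centroid classifier), and the two numeric features per image patch (brightness and roughness), the sample values, and the function names are all made up for illustration.

```python
from collections import defaultdict
from math import dist


def train(labeled_examples):
    """Average the feature vectors seen for each label (one centroid per label)."""
    sums = {}
    counts = defaultdict(int)
    for features, label in labeled_examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: tuple(s / counts[label] for s in total)
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the unlabeled features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data: (brightness, roughness) -> terrain type.
LABELED = [
    ((0.80, 0.10), "sand"),
    ((0.75, 0.20), "sand"),
    ((0.30, 0.85), "bedrock"),
    ((0.35, 0.90), "bedrock"),
]

centroids = train(LABELED)
print(predict(centroids, (0.70, 0.15)))  # an unlabeled patch that resembles sand
```

The more labeled examples go into `train`, the better the averages represent each terrain type, which is the same reason a real system benefits from volunteers annotating thousands of images.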

The problem is that while there are lots of ready-made datasets of images with faces, cats, and cars labeled, there aren’t many of the Martian surface annotated with different terrain types.

“Typically, hundreds of thousands of examples are needed to train a deep learning algorithm. Algorithms for self-driving cars, for example, are trained with numerous images of roads, signs, traffic lights, pedestrians and other vehicles. Other public datasets for deep learning contain people, animals and buildings — but no Martian landscapes,” said NASA/JPL AI researcher Hiro Ono in a news release.

So NASA is making one, and you can help.

To be precise, they already have an algorithm, called Soil Property and Object Classification or SPOC, but are asking for assistance in improving it.

The agency has uploaded thousands of images from Mars to Zooniverse, and anyone can take a few minutes to annotate them (after reading through the tutorial, of course). It may not sound that difficult to draw shapes around rocks, sandy stretches and so on, but you may, as I did, immediately run into trouble. Is that a “big rock” or “bedrock”? Is it more than 50 centimeters wide? How tall is it?

So far the project has labeled about half of the nearly 9,000 images it wants to get done (with more perhaps to come), and you can help it along toward that goal if you have a few minutes to spare; no commitment is required. The site is available in English now, with Spanish, Hindi, Japanese and other translations on the way.

Improvements to the AI might let the rover tell not just where it can drive, but also the likelihood of losing traction and other factors that could influence individual wheel placement. A better SPOC would also make things easier for the team planning Curiosity’s movements: if they’re confident in its classifications, they don’t have to spend as much time poring over the imagery to double-check them.

Keep an eye on Curiosity’s progress at the mission’s webpage.